30 Design Strategies and Tactics from 40 Years of Investigation

Appendix: Further information and examples

Appropriate uses of computer and paper in assessment

While computers are effective at presenting and scoring short-answer or multiple-choice questions, there is a tension between the desire for more valid forms of assessment (see ‘What You Test is What You Get’) and the lure of instant, simple and cheap computer-based assessment. The limits of computer-based testing are discussed at length in ISDDE (2012).

Technology has moved on somewhat since then, particularly with the availability of tablets with ‘free-form’ stylus input (or, more simply, the ability to photograph written work). However, automatically assessing such work is still an unsolved problem, or at least one that would turn every assessment task into a major, expensive development in machine learning. A further issue is that computer provision in schools is still too variable to support the traditional model of a whole district, state or even nation taking the same ‘secret’ test together. This has led to concepts such as ‘testing on demand’ and ‘adaptive testing’, which require large banks of carefully aligned and calibrated test items from which unique but comparable assessment ‘papers’ can be assembled on the fly. This approach, which also depends absolutely on instant computer scoring, favors short, artificial, fragmented questions, each targeted at a single mathematical concept. Calibrating richer, high-validity tasks is possible, but more complex and expensive.

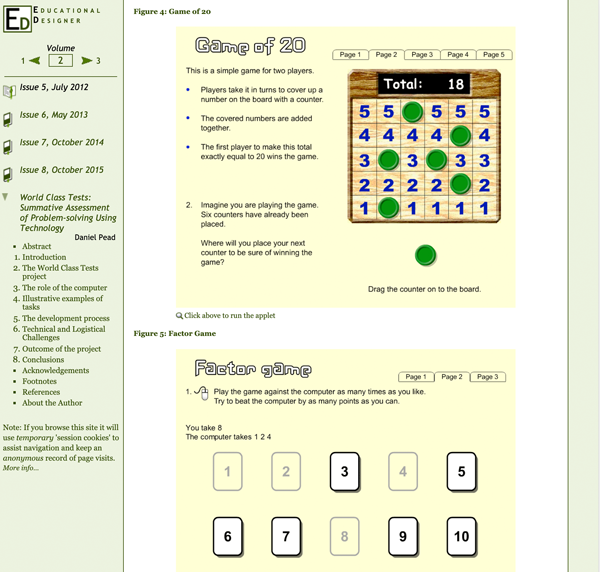

One alternative is to let computers play to their strengths: quickly but superficially assessing large swathes of the curriculum with simple ‘single item’ questions, while also presenting richer questions on screen that are partially answered on paper and marked by humans. One such project from the early 2000s is described by Pead (2012).

…a modern version of this would almost certainly explore using tablets, styluses or cameras to address the logistics of distributing paper and collecting responses. The key feature is hybrid marking, with the richer responses assessed by humans.

Although these issues come to a head when computers are involved, the pressures for low-cost testing, using multiple-choice formats or unskilled markers who mechanically apply simple rubrics, apply to paper testing too (Burkhardt, 2009).

References

Burkhardt, H. (2009) On Strategic Design. Educational Designer, 1(3). Retrieved from: http://www.educationaldesigner.org/ed/volume1/issue3/article9/

ISDDE (2012) Black, P., Burkhardt, H., Daro, P., Jones, I., Lappan, G., Pead, D., Stephens, M. High-stakes Examinations to Support Policy. Educational Designer, 2(5). Retrieved from: http://www.educationaldesigner.org/ed/volume2/issue5/article16/

Pead, D. (2012) World Class Tests: Summative Assessment of Problem-solving Using Technology. Educational Designer, 2(5). Retrieved from: http://www.educationaldesigner.org/ed/volume2/issue5/article18/